Neuromorphic and In-Memory Computing Architectures are emerging as some of the most disruptive forces in modern semiconductor and AI hardware design. As artificial intelligence workloads keep growing, conventional computing models struggle with power consumption, latency, and inefficient data movement. Neuromorphic and In-Memory Computing Architectures offer a fundamentally different approach: neuromorphic designs take inspiration from how the human brain processes information, while in-memory designs perform computation where the data is stored.

This blog explores how these architectures work, why they matter, where they are being adopted, and how they are shaping the future of AI hardware, edge computing, and the semiconductor industry.

What are Neuromorphic and In-Memory Computing Architectures?

Neuromorphic and In-Memory Computing Architectures refer to advanced computing paradigms designed to overcome the limitations of traditional von Neumann systems.

Neuromorphic computing emulates biological neural systems by using artificial neurons and synapses that communicate through discrete electrical spikes. These systems rely on spiking neural networks, event-driven processing, and adaptive learning mechanisms that are modeled on biological neural processing.

In-memory computing, on the other hand, integrates computation directly within memory structures. Instead of constantly transferring data between memory and processors, calculations are performed where the data resides, dramatically reducing energy consumption and latency.

Together, Neuromorphic and In-Memory Computing Architectures enable intelligent systems that are faster, more energy-efficient, and capable of real-time learning.

Why Neuromorphic and In-Memory Computing Architectures Matter Today

The rapid expansion of AI, edge devices, and data-intensive applications has exposed critical bottlenecks in traditional computing architectures. Power-hungry GPUs and CPUs are inefficient for continuous, real-time inference at the edge.

Neuromorphic and In-Memory Computing Architectures address these challenges by:

- Reducing data movement between memory and processor, which is often the largest contributor to energy consumption in data-intensive workloads

- Enabling ultra-low-power AI inference

- Supporting real-time, event-driven decision making

- Improving efficiency through architecture rather than relying only on transistor scaling as Moore’s Law slows

For industries such as healthcare, automotive, robotics, and smart infrastructure, these architectures are becoming strategically essential rather than experimental.

How Neuromorphic Computing Works

Neuromorphic systems are built around spiking neural networks, where information is encoded in the timing of spikes instead of continuous numerical values. Computation occurs only when events happen, eliminating unnecessary processing.
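To make the spiking behavior described above concrete, here is a minimal sketch of a discrete-time leaky integrate-and-fire (LIF) neuron, one common simplified neuron model. The function name `simulate_lif` and the parameter values (`threshold`, `leak`, `v_reset`) are illustrative assumptions, not taken from any specific chip or framework.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, v_reset=0.0):
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    input_current: sequence of input values, one per time step.
    Returns the list of time steps at which the neuron emitted a spike.
    All parameter values are illustrative assumptions.
    """
    v = v_reset
    spike_times = []
    for t, current in enumerate(input_current):
        # The membrane potential decays (leaks) and then integrates the input.
        v = leak * v + current
        if v >= threshold:
            # Crossing the threshold emits a spike event and resets the neuron.
            spike_times.append(t)
            v = v_reset
    return spike_times


if __name__ == "__main__":
    print(simulate_lif([0.6] * 10))   # strong input: spikes at steps 1, 3, 5, 7, 9
    print(simulate_lif([0.05] * 10))  # weak input: never reaches threshold, prints []
```

Downstream work is triggered only by the entries in `spike_times`, so a weak or silent input produces no events at all. That is the sense in which computation happens only when events occur.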

Key characteristics include:

- Event-driven and asynchronous operation

- Artificial neurons and synapses implemented in silicon

- On-chip learning using synaptic plasticity rules such as spike-timing-dependent plasticity (STDP)

- Analog, mixed-signal, or fully digital circuit implementations

This brain-inspired approach allows neuromorphic processors to achieve extremely high energy efficiency while adapting to dynamic environments.
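The plasticity rule mentioned in the list above can be sketched with the widely used pair-based form of spike-timing-dependent plasticity: a synapse is strengthened when the presynaptic spike arrives shortly before the postsynaptic spike, and weakened in the opposite order. The function names, amplitudes, and time constants below are assumptions chosen for illustration only.

```python
import math


def stdp_delta_w(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                 tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for one pre/post spike pair.

    Times are in milliseconds. Amplitudes and time constants are
    illustrative assumptions, not values from a specific device.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Pre fires before post: potentiate, more strongly for small gaps.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fires before pre: depress, more strongly for small gaps.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(weight, t_pre, t_post, w_min=0.0, w_max=1.0):
    """Apply the STDP update and clip the weight to [w_min, w_max]."""
    return min(w_max, max(w_min, weight + stdp_delta_w(t_pre, t_post)))


if __name__ == "__main__":
    print(stdp_delta_w(10.0, 15.0))     # positive: causal pairing
    print(stdp_delta_w(15.0, 10.0))     # negative: anti-causal pairing
    print(apply_stdp(0.5, 10.0, 15.0))  # weight nudged upward
```

Because the update depends only on the timing of spikes seen locally at the synapse, a rule of this kind can in principle be evaluated on-chip without a separate training pass.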

How In-Memory Computing Works

In-memory computing fundamentally changes how computation is performed. Instead of moving data from memory to a processor, operations such as matrix-vector multiplication occur directly inside memory arrays.

Key techniques include:

- Compute-in-memory using ReRAM, MRAM, and phase-change memory

- Analog in-memory computing for massively parallel operations

- Digital in-memory computing optimized for AI workloads

By minimizing data transfer, in-memory computing significantly improves performance per watt, making it ideal for AI accelerators and edge AI hardware.
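As a rough illustration of how a matrix-vector multiplication can happen inside a memory array, the sketch below models an idealized resistive crossbar. Each cell stores a conductance, input voltages are applied to the rows, and the current collected on each column is the sum of voltage times conductance (Ohm's law per cell, Kirchhoff's current law per column). The function name and the example values are assumptions, and real arrays also have to deal with wire resistance, noise, and converter precision, which are ignored here.

```python
def crossbar_mvm(conductances, voltages):
    """Idealized analog crossbar read.

    conductances[i][j]: conductance in siemens of the cell at row i, column j.
    voltages[i]: voltage in volts applied to row i.
    Returns the current in amperes collected on each column.
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("one input voltage is required per row")

    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law for the cell; summing down a column models
            # Kirchhoff's current law on the shared column wire.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


if __name__ == "__main__":
    g = [[1e-6, 2e-6],
         [3e-6, 4e-6]]   # hypothetical stored conductances
    v = [0.5, 1.0]       # hypothetical input voltages
    print(crossbar_mvm(g, v))  # roughly [3.5e-06, 5e-06] amperes
```

In hardware, all cells contribute their currents at the same time, so the nested loops here stand in for what the array does in a single read step. The stored matrix never leaves the memory.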

Core Technologies Powering These Architectures

Neuromorphic and In-Memory Computing Architectures rely on several foundational technologies:

Spiking Neural Networks

Spiking neural networks enable time-based information processing and are central to neuromorphic computing.
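One simple way to see what time-based processing means is time-to-first-spike (latency) coding, where a stronger input produces an earlier spike. The sketch below assumes inputs normalized to the range 0 to 1 and a linear mapping onto a time window. Both choices, and the name `latency_encode`, are illustrative assumptions, and latency coding is only one of several encoding schemes used with spiking networks.

```python
def latency_encode(intensities, t_max=100.0):
    """Encode normalized intensities as first-spike times.

    intensities: values in [0, 1]; larger values spike earlier.
    t_max: length of the encoding window (arbitrary time units).
    Returns one spike time per input, or None if the input is zero
    and therefore never spikes within the window.
    """
    spike_times = []
    for x in intensities:
        if not 0.0 <= x <= 1.0:
            raise ValueError("intensities must be normalized to [0, 1]")
        # Linear mapping (an assumption): x = 1 spikes at t = 0,
        # x close to 0 spikes near the end of the window.
        spike_times.append(None if x == 0.0 else t_max * (1.0 - x))
    return spike_times


if __name__ == "__main__":
    print(latency_encode([1.0, 0.5, 0.0]))  # [0.0, 50.0, None]
```

The value of each input is carried entirely by when its spike occurs, and an input of zero generates no event at all.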

Emerging Memory Devices

Memristors, ReRAM, MRAM, and phase-change memory act as both storage and compute elements, enabling compute-in-memory operations.

Crossbar Memory Arrays

Analog crossbar architectures allow parallel vector-matrix operations, critical for neural network inference.
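Neural network weights can be negative, but a physical conductance cannot. A common workaround is to store each weight as the difference between two conductances and subtract the two column currents. The sketch below illustrates that idea under idealized assumptions. The function names, the conductance range `g_min` to `g_max`, and the read voltage are hypothetical values for illustration.

```python
def weights_to_conductance_pairs(weights, g_min=1e-6, g_max=1e-4):
    """Map signed weights to (G_plus, G_minus) conductance matrices.

    Positive weights are stored in G_plus, negative weights in G_minus,
    so that G_plus - G_minus is proportional to the weight.
    Returns (g_plus, g_minus, scale), with scale in siemens per weight unit.
    """
    w_abs_max = max(abs(w) for row in weights for w in row) or 1.0
    scale = (g_max - g_min) / w_abs_max
    g_plus = [[g_min + scale * max(w, 0.0) for w in row] for row in weights]
    g_minus = [[g_min + scale * max(-w, 0.0) for w in row] for row in weights]
    return g_plus, g_minus, scale


def differential_mvm(weights, inputs, v_read=0.2):
    """Compute the vector-matrix product using differential column currents.

    weights[i][j] connects input i to output j. Inputs scale the read voltage.
    """
    g_plus, g_minus, scale = weights_to_conductance_pairs(weights)
    n_rows, n_cols = len(weights), len(weights[0])
    outputs = []
    for j in range(n_cols):
        i_plus = sum(g_plus[i][j] * inputs[i] * v_read for i in range(n_rows))
        i_minus = sum(g_minus[i][j] * inputs[i] * v_read for i in range(n_rows))
        # Subtracting the paired currents cancels the g_min offset;
        # dividing by scale * v_read converts current back to weight units.
        outputs.append((i_plus - i_minus) / (scale * v_read))
    return outputs


if __name__ == "__main__":
    w = [[0.5, -1.0],
         [-0.25, 2.0]]
    print(differential_mvm(w, [1.0, 2.0]))  # approximately [0.0, 3.0]
```

The cost of this scheme is two memory cells per weight. In a real device, the number of distinguishable conductance levels also limits the effective weight precision.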

3D Stacking and Chiplet Integration

Advanced packaging techniques reduce latency and improve scalability by tightly integrating logic and memory.

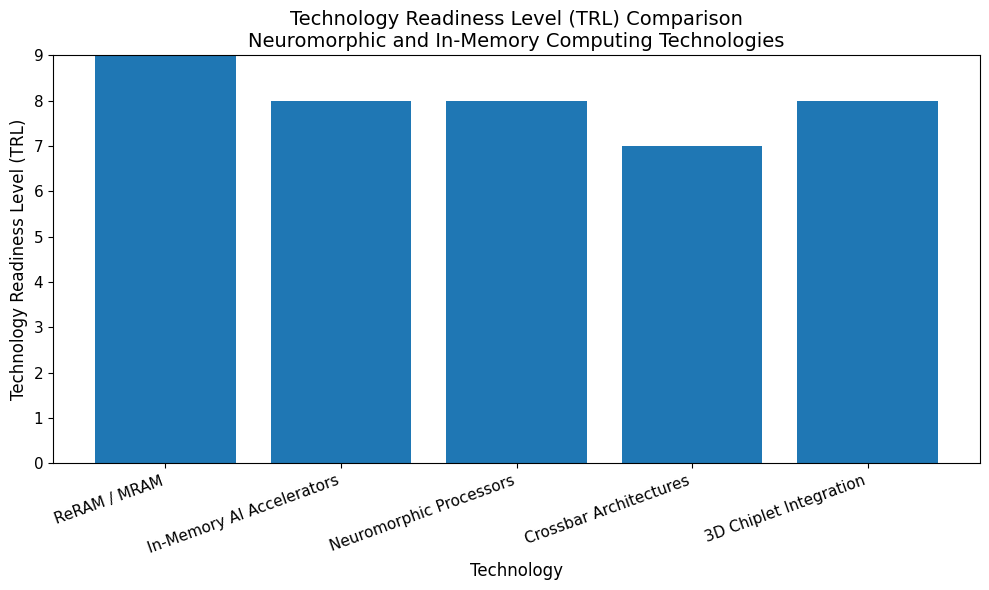

Technology Readiness and Commercial Maturity

The maturity of Neuromorphic and In-Memory Computing Architectures varies by component:

- Memory devices such as ReRAM and MRAM are the most mature component and are commercially available as memory products, although foundry support for them is still limited

- Neuromorphic processors are in early commercial deployment

- Crossbar-based compute arrays are transitioning from prototype to product

- 3D chiplet integration is rapidly scaling in advanced semiconductor nodes

This mixed readiness reflects a healthy innovation pipeline with both near-term and long-term opportunities.

Leading Companies Driving Innovation

Major semiconductor and AI hardware companies are investing heavily in Neuromorphic and In-Memory Computing Architectures, recognizing their strategic importance for next-generation AI systems, edge computing, and energy-efficient hardware.

Established Industry Leaders

Established leaders include Intel, IBM, Samsung, Qualcomm, TSMC, SK hynix, and Micron, all of which are building foundational neuromorphic and in-memory computing platforms while securing strong, long-term intellectual property positions.

Other major global semiconductor and technology companies actively advancing neuromorphic computing, in-memory computing, or AI-in-memory architectures include:

- Advanced Micro Devices (AMD)

- NVIDIA (near-memory and AI-accelerator research)

- Apple (on-device and memory-centric AI architectures)

- Huawei (Ascend AI and memory-driven compute systems)

- Renesas Electronics

- Infineon Technologies

- Panasonic

- Western Digital

- Fujitsu

- GlobalFoundries

- ASE Technology Holding

- Amkor Technology

- CEA-Leti (French research institute)

Together, these organizations are shaping manufacturing processes, advanced packaging, memory technologies, and large-scale deployment strategies for neuromorphic and in-memory computing hardware.

Startups and Emerging Innovators

Innovative startups are playing a critical role in accelerating commercialization, particularly for low-power and edge AI use cases. Key players include:

- BrainChip (Akida neuromorphic processors)

- Innatera Nanosystems (ultra-low-power neuromorphic chips)

- SynSense (event-based vision and audio processors)

- Mythic (analog compute-in-memory accelerators)

- Knowm (memristive and adaptive computing systems)

- MemryX (edge AI inference accelerators)

- SEMRON (analog in-memory computing based on memcapacitive devices)

- d-Matrix (in-memory AI accelerators for data centers)

- TetraMem (resistive memory-based computing)

- 4DS Memory (ReRAM technology)

- Weebit Nano (embedded ReRAM solutions)

- Crossbar Inc. (ReRAM and storage-class memory)

Collectively, these startups are pushing Neuromorphic and In-Memory Computing Architectures from research into real-world products, expanding adoption across edge devices, automotive systems, robotics, and next-generation AI platforms.

Academic and Research Ecosystem

Universities and research consortia play a critical role in advancing Neuromorphic and In-Memory Computing Architectures. Leading research hubs include the University of Manchester, Harvard University, Queen Mary University of London, the University of Texas at San Antonio, Radboud University, and major European research institutes.

These institutions contribute breakthroughs in materials, device physics, architectures, and training algorithms.

High-Value Applications

Neuromorphic and In-Memory Computing Architectures enable applications that are difficult or impractical within the power and latency budgets of conventional hardware:

- Edge AI and IoT devices with always-on intelligence

- Healthcare wearables for real-time biosignal monitoring

- Autonomous vehicles requiring ultra-low latency sensor fusion

- Robotics with adaptive perception and control

- Cybersecurity systems performing real-time anomaly detection

- Smart city infrastructure for traffic and energy optimization

These use cases highlight the shift toward intelligent systems operating closer to the data source.

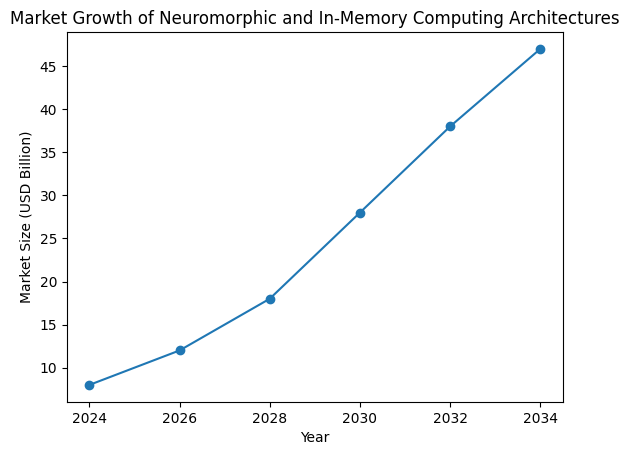

Market Outlook

The market for Neuromorphic and In-Memory Computing Architectures is expected to grow rapidly over the next decade. Analysts project strong double-digit growth driven by edge AI adoption, energy efficiency mandates, and demand for scalable AI hardware.

By 2030, these architectures are expected to play a central role in next-generation AI accelerators, autonomous systems, and low-power computing platforms.

Challenges and Adoption Barriers

Despite strong momentum, challenges remain. Hardware-software co-design complexity, limited foundry support for emerging memory technologies, and the need for standardized training frameworks continue to slow adoption.

However, increasing industry collaboration, standardization efforts, and investment are steadily reducing these barriers.

The Road Ahead

Neuromorphic and In-Memory Computing Architectures represent a long-term shift rather than a short-lived trend. As AI moves from centralized data centers to the edge, the demand for energy-efficient, adaptive, and scalable hardware will only increase.

Organizations that invest early in research, partnerships, and intellectual property will be best positioned to lead this transformation.

Conclusion

Neuromorphic and In-Memory Computing Architectures are redefining how intelligence is implemented in silicon. By combining brain-inspired processing with memory-centric design, they offer a practical path toward sustainable, high-performance AI.

For technology leaders, startups, researchers, and IP strategists, this is a pivotal moment. The convergence of neuromorphic computing and in-memory computing is not just improving existing systems; it is shaping the future of intelligent machines.

How PatentsKart Can Help

PatentsKart supports innovators, startups, research institutions, and enterprises working on neuromorphic and in-memory computing by providing end-to-end intellectual property and technology intelligence services. From patent landscape analysis and freedom-to-operate (FTO) studies to high-quality patent drafting, filing, and licensing strategy, PatentsKart helps organizations protect core innovations, reduce IP risk, and build strong competitive positions in this rapidly evolving domain.

FAQs

- What are Neuromorphic and In-Memory Computing Architectures?

Neuromorphic and In-Memory Computing Architectures are advanced computing models that improve AI efficiency by mimicking brain-like processing and performing computation directly inside memory. They reduce data movement, lower power consumption, and enable fast, real-time intelligence for modern AI applications.

- How do Neuromorphic and In-Memory Computing Architectures differ from traditional computing?

Traditional computing separates memory and processing, causing high energy and latency costs. Neuromorphic and In-Memory Computing Architectures reduce this bottleneck through event-driven processing and memory-centric computation, resulting in faster AI inference, lower power usage, and improved scalability.

- Are Neuromorphic and In-Memory Computing Architectures available commercially?

Yes. Several neuromorphic processors and in-memory AI accelerators are commercially available today, especially for edge AI and low-power systems. While some advanced implementations are still emerging, the technology has moved beyond research into real-world deployment.

- Why are Neuromorphic and In-Memory Computing Architectures important for the future of AI?

They are critical because they enable scalable, energy-efficient AI as intelligence moves from data centers to edge devices. Neuromorphic and In-Memory Computing Architectures support always-on AI, real-time learning, and high-performance inference within tight power and thermal budgets.